Introduction:

With your data ingesting and workbooks deployed, we are now ready to deploy the collector via Proactive Remediations in Intune.

This will likely be the final article in this series, at least for now. To be clear, I mean only the Windows Endpoints series for App/Device/Admin Inventory. Quite a few other collectors have their own series lined up to go out after this one.

In this section, we will cover…

- Requirements

- Recommendations

- Preparing the Collection Script

- Pushing the Script via Proactive Remediations

- A Tangent on Cost and Run Frequency

- A Tangent on Running on Different Schedules

- Back to the Deployment

- Conclusion

Requirements:

This should be pretty obvious, but you need to have completed the setup article covering setup and initial data ingestion, and you need to have successfully gotten data to show up in the tables (step 6). You should also have your workbooks set up so you can monitor data as it comes in. If you haven’t gotten that far, you should not be deploying these yet.

As mentioned a few times before, you need licensing that covers Intune Proactive Remediations.

Recommendations:

As I started writing this, I realized I had a few important notes and decided it made more sense to group them here.

1: While you could technically follow these steps to scope the collectors straight to All Devices right off the bat, please don’t. Scope to a test group first. That way you can monitor your ingestion in MB, calculate the price if you know your region’s cost, and work out what it would cost to scale to a larger group. I tend to roll out in stages of 1%, 10%, and 100%.

For those wanting to better understand how to get those cost numbers and do that rollout prediction math, see the below article. It’s worth noting that I have workbooks to automate this process, and I do plan to provide them.

2: For those who run into trouble, there is a section of my Learning Series that covers this process in depth. See below. I am going to link to it about 500 times in this article.
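To make the rollout math from recommendation 1 concrete, here is a minimal Python sketch (an illustration, not the workbooks mentioned above). It assumes ingestion scales linearly with device count, treats a month as 30 days and 1 GB as 1,000 MB, and uses a hypothetical `PRICE_PER_GB` placeholder; substitute your region’s actual price and the MB/day you measured from your own test group.

```python
"""Sketch: project monthly ingestion cost for a staged (1% -> 10% -> 100%) rollout.

Assumptions (not from the article): ingestion scales linearly with device
count, a month is 30 days, 1 GB = 1,000 MB, and PRICE_PER_GB is a placeholder.
"""

PRICE_PER_GB = 2.50  # hypothetical rate; replace with your region's actual price


def project_monthly_cost(test_devices: int, test_mb_per_day: float,
                         target_devices: int,
                         price_per_gb: float = PRICE_PER_GB) -> float:
    """Scale a test group's measured daily ingestion (MB) to target_devices and price it."""
    if test_devices <= 0:
        raise ValueError("test_devices must be positive")
    mb_per_device_per_day = test_mb_per_day / test_devices
    gb_per_month = mb_per_device_per_day * target_devices * 30 / 1000
    return gb_per_month * price_per_gb


if __name__ == "__main__":
    fleet_size = 10_000
    # Example inputs only: a 100-device (1%) test group measured at 50 MB/day.
    for percent in (1, 10, 100):
        devices = fleet_size * percent // 100
        cost = project_monthly_cost(100, 50.0, devices)
        print(f"{percent:>3}% ({devices:>6,} devices): ~{cost:,.2f} per month")
```

Because the projection is linear, each stage simply multiplies the previous estimate by the growth in device count, which is why measuring a small group first is enough to predict the larger ones.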

Preparing the Collection Script:

Before we can deploy the script via Proactive Remediations, we need to make one minor tweak to the script you have been running manually to send data.

In the Variables Region, locate $Delay and change it from $False back to $True. If you are wondering what $Delay is and does, see the section “What is $Delay” here.

Additionally, take note that $CollectDeviceInventory, $CollectAppInventory, and $collectAdminInventory are all set to $True. I will circle back to these later.
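Because it is easy to upload a copy that still has $Delay set to $False, a quick pre-upload check can help. Here is a minimal Python sketch that assumes the flags are assigned on their own lines as `$Name = $True` or `$Name = $False`; `read_flags` and `check_ready` are hypothetical helpers (not part of the collector script), and the check only looks at $Delay and the three collector flags.

```python
"""Sketch: sanity-check the Variables region before uploading the script.

Hypothetical helper; assumes flags are assigned on their own lines as
"$Name = $True" or "$Name = $False".
"""
import re

# PowerShell variable names are case-insensitive, so match and compare without case.
ASSIGNMENT = re.compile(r"^[ \t]*\$(\w+)[ \t]*=[ \t]*\$(true|false)\b",
                        re.IGNORECASE | re.MULTILINE)
COLLECTORS = ("collectdeviceinventory", "collectappinventory", "collectadmininventory")


def read_flags(script: str) -> dict[str, bool]:
    """Map lower-cased variable names to their boolean values (last assignment wins)."""
    return {name.lower(): value.lower() == "true"
            for name, value in ASSIGNMENT.findall(script)}


def check_ready(script: str) -> list[str]:
    """Return a list of problems; an empty list means the flags look deployment-ready."""
    flags = read_flags(script)
    problems = []
    if flags.get("delay") is not True:
        problems.append("$Delay should be $True before deploying via Proactive Remediations")
    for collector in COLLECTORS:
        if collector not in flags:
            problems.append(f"${collector} assignment not found")
    if not any(flags.get(c) for c in COLLECTORS):
        problems.append("no collector is enabled")
    return problems
```

Running `check_ready` on the script text and getting an empty list back means the flags match the deployment-ready state described here; anything else is listed as a human-readable problem.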

The final, deployment-ready Variables section should look like the below.

Pushing the Script via Proactive Remediations:

Warning: Microsoft loves to move these menus around and change how they look. I make an effort to keep the learning series in line with their changes, so if you run into issues like missing menus, look for the generic version of this process there.

First, head into Intune.

As of July 2023, the Proactive Remediations (PR) menu is located under Devices > Remediations.

Choose Create Script Package.

Note: If the option is greyed out, you may need to confirm your tenant licensing. See the learning series here for details.

You will then be prompted for a number of values. These are the only ones you need to set or change:

- Detection Script File

This is the data collection script; just browse to wherever you saved it.

- Run Script in 64-Bit PowerShell

Change this to Yes. Without it, you can’t query 64-bit registry locations properly.

We don’t need a remediation script for this, nor do we want to run as the user (we want to run as System). Whether to enable Script Signature Check is something you will have to research on your own and decide for yourself.

Once the information has been entered, hit Next.

If you use Scope Tags, feel free to add them. When done, hit Next.

For groups, you need to select either a user group or a device group. I have several notes on this topic.

- Know that if you select a user group, the script runs on any device that user logs into. In my experience, it does not run on devices simply because the user owns them (primary user), nor on devices they aren’t actively logged into.

- Additionally, match your exclusions to your inclusions: if you scope to a user group, only exclude user groups, and if you scope to a device group, only exclude device groups. Don’t scope to devices and try to exclude users.

- Given these are data collection scripts, I highly recommend scoping to DEVICES, as we need these scripts to run whether or not a user is logged in. Again, I also recommend starting with a small test group and rolling these out slowly.

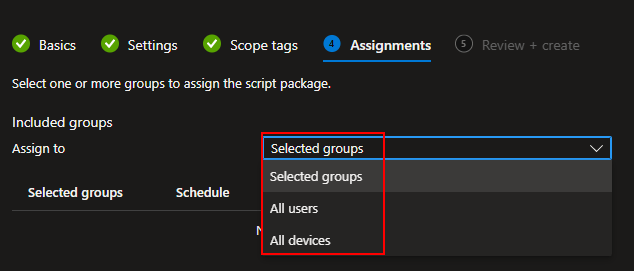

You can deploy to all devices using the upper drop-down Selected Groups box or pick individual groups using the Select Groups to Include hyperlink.

All Devices:

Selected Groups:

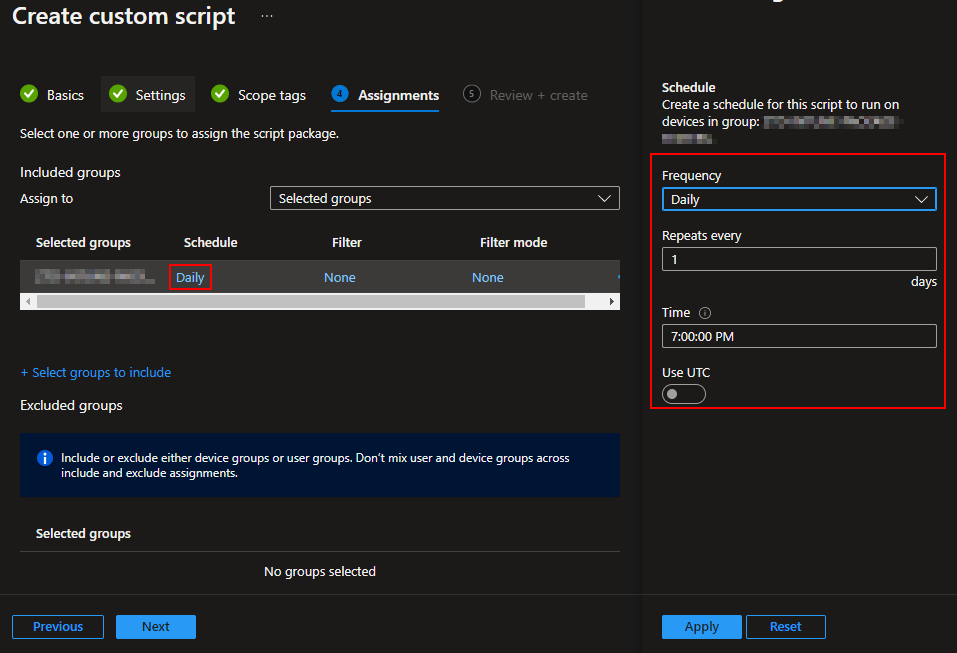

Once a group is selected, you can alter the schedule for that group, and you can put different groups on different schedules. That said, I really would not advise having different schedules on the same collector deployment; it makes understanding and explaining how “live” your data is very difficult.

A Tangent on Cost and Run Frequency:

You may be asking yourself how often you should run these scripts. As I mentioned in the cost sections, I run them every four hours. That means, assuming devices are online, data is at most 4 hours + 50 minutes (due to $delay) behind “live.” Being behind live doesn’t mean your data is wrong, only that it might be. If you want to run them more frequently, say hourly, that would cost 4x as much, because you would run them 4x as often and pull in 4x as much data.
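The freshness-versus-cost arithmetic above can be written out as a small Python sketch. It assumes devices are online, that $delay adds at most 50 minutes (the figure quoted above), and that cost scales linearly with the number of runs per day; the function names are illustrative only.

```python
"""Sketch of the run-frequency trade-off: data freshness versus ingestion cost.

Assumptions: devices are online, $Delay adds at most 50 minutes (the figure
quoted in the article), and cost scales linearly with runs per day.
"""

DELAY_MINUTES = 50  # worst-case extra delay added by $Delay


def max_staleness_minutes(interval_hours: float,
                          delay_minutes: int = DELAY_MINUTES) -> float:
    """Worst-case age, in minutes, of the newest record from an online device."""
    return interval_hours * 60 + delay_minutes


def cost_multiplier(new_interval_hours: float,
                    baseline_interval_hours: float = 4) -> float:
    """How many times more (or less) a schedule costs versus the baseline schedule."""
    runs_per_day_new = 24 / new_interval_hours
    runs_per_day_baseline = 24 / baseline_interval_hours
    return runs_per_day_new / runs_per_day_baseline


if __name__ == "__main__":
    for hours in (1, 4, 8):
        print(f"every {hours}h: up to {max_staleness_minutes(hours):.0f} min behind live, "
              f"{cost_multiplier(hours):g}x the 4-hour cost")
```

Going from every 4 hours to hourly cuts the worst-case lag from 290 to 110 minutes but multiplies cost by 4, which is the trade-off to weigh when picking a schedule.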

There are in-depth explanations of this in the cost articles from this series; see the Log Analytics Index under the Log Analytics for Windows Endpoints 1.0 section. I also have a section with the same header that discusses similar concepts in the learning series here.

A Tangent on Running on Different Schedules:

I touched on this briefly in a prior article, and now it’s finally time to circle back to it. Again, because all three tables are controlled by one collector script, you have to do a little work to run them on different schedules. Fortunately, it’s very simple.

You just need to make copies of your script (say, three if you want to run each collector on a different schedule) and then turn combinations of $CollectDeviceInventory, $CollectAppInventory, and $collectAdminInventory on or off in each copy.

For instance, if you want one collector per component so that each can run on its own schedule, you would make three copies of the working script and turn off two of the three collectors in each one. You now have three scripts, one per collector, which you can deploy as three separate PRs on three different schedules.
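If you would rather not edit three copies by hand, the copy-and-toggle step can be scripted. Below is a minimal Python sketch that assumes each flag is assigned on its own line as `$Name = $True` or `$Name = $False`; `set_flag` and `split_collectors` are hypothetical helpers, not part of the collector script. Because PowerShell variable names are case-insensitive, the match ignores case and keeps your original spelling (for example `$collectAdminInventory`).

```python
"""Sketch: produce one single-collector copy of the script per inventory type.

Hypothetical helper; assumes each flag is assigned on its own line as
"$Name = $True" or "$Name = $False" in the Variables region.
"""
import re

COLLECTOR_FLAGS = ("CollectDeviceInventory", "CollectAppInventory", "CollectAdminInventory")


def set_flag(script: str, name: str, value: bool) -> str:
    """Set exactly one '$name = $True/$False' assignment, matching the name case-insensitively."""
    pattern = re.compile(rf"^([ \t]*\${name}[ \t]*=[ \t]*)\$(?:true|false)\b",
                         re.IGNORECASE | re.MULTILINE)
    literal = "$True" if value else "$False"
    # Keep the original "$Name = " text (and its casing); replace only the value.
    updated, count = pattern.subn(lambda m: m.group(1) + literal, script)
    if count != 1:
        raise ValueError(f"expected one assignment of ${name}, found {count}")
    return updated


def split_collectors(script: str) -> dict[str, str]:
    """Return {flag: script text} where only that flag's collector is enabled."""
    variants = {}
    for enabled in COLLECTOR_FLAGS:
        text = script
        for flag in COLLECTOR_FLAGS:
            text = set_flag(text, flag, flag == enabled)
        variants[enabled] = text
    return variants
```

Each value in the returned dictionary is a complete script with exactly one collector enabled and everything else (including $Delay) untouched, so each one can be saved and uploaded as its own PR.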

Back to the Deployment:

Once you have your groups and schedules figured out, you can go ahead and hit Next and Create.

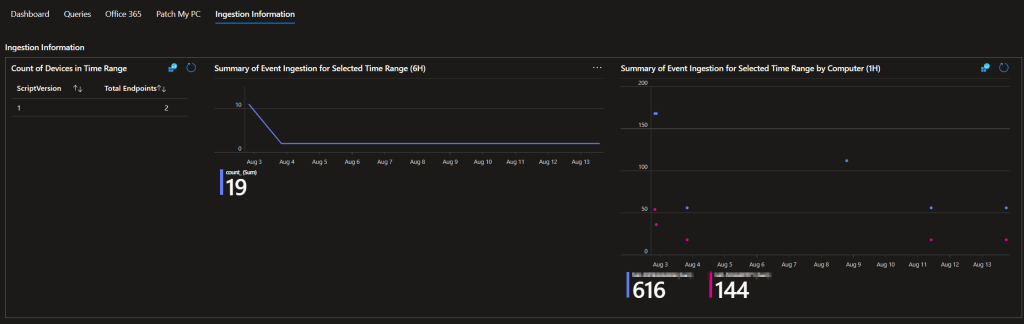

You can then use the handy-dandy Ingestion Information pages (example below) in the workbooks to monitor how many devices are sending in data, how much data they are sending, and so on. This should show a nice upward trend as more and more devices pick up the assignment and begin their schedule.

That said, I have found that Proactive Remediation deployments are PAINFULLY slow. Even if you tell it to run hourly and scope it to thousands of devices, I would expect only about 10% of your scoped population to have checked in within 3 hours.

So, if you scoped to 30 devices on an hourly schedule, 3 hours later you might have 3 new devices in the log. If you scoped to 10,000 devices on an hourly schedule, 3 hours later you might have only 1,000. If the schedule is every X hours, keep in mind that devices might not run it at all until the clock reaches a UTC multiple of that interval.
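To put rough numbers on the paragraph above, here is a small Python sketch. The 10% figure is the rule of thumb from the previous paragraph, not a documented Intune rate, and `next_utc_multiple` models the UTC-multiple behavior described above as an assumption rather than confirmed scheduling logic; it only accepts intervals that divide evenly into 24 hours.

```python
"""Sketch: rough expectations for how fast a Proactive Remediation rollout reports in.

Assumptions: the ~10% check-in rate within ~3 hours is the article's rule of
thumb (not a documented Intune figure), and "every X hours" schedules are
modeled as starting at UTC hours that are multiples of X.
"""
from datetime import datetime, timedelta, timezone


def expected_checkins(scoped_devices: int, fraction: float = 0.10) -> int:
    """Rough number of devices that have reported about 3 hours after assignment."""
    return round(scoped_devices * fraction)


def next_utc_multiple(now: datetime, every_hours: int) -> datetime:
    """Next UTC time after `now` whose hour is a multiple of every_hours."""
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    if every_hours <= 0 or 24 % every_hours:
        raise ValueError("every_hours must divide 24 evenly (1, 2, 3, 4, 6, 8, 12, 24)")
    now_utc = now.astimezone(timezone.utc)
    midnight = now_utc.replace(hour=0, minute=0, second=0, microsecond=0)
    step = timedelta(hours=every_hours)
    slots_elapsed = (now_utc - midnight) // step  # whole intervals since UTC midnight
    return midnight + (slots_elapsed + 1) * step


if __name__ == "__main__":
    print(expected_checkins(30), expected_checkins(10_000))
    start = datetime(2023, 7, 1, 9, 30, tzinfo=timezone.utc)
    print(next_utc_multiple(start, 4).isoformat())
```

With a 4-hour schedule assigned at 09:30 UTC, this model says the first run would not happen before 12:00 UTC, which is one more reason early ingestion numbers look so low.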

All in all, these are just not fast to roll out.

Conclusion:

Given some time, you should have some new devices sending in data. Let it run for a week, do the scaling math, roll it out to the next group, and so on down the line until you are out to 100%.

This concludes the planned segments of this series. Thank you for your interest, and happy data mining!

The Next Steps:

Again, this is the final planned article in this series, but there are more collectors and workbooks to come!

See the index page for all new updates!

Log Analytics Index – Getting the Most Out of Azure (azuretothemax.net)

I will be putting the Windows Endpoint guides on the Log Analytics Index page under the Win365 series.

Disclaimer:

The following is the disclaimer that applies to all scripts, functions, one-liners, setup examples, documentation, etc. This disclaimer supersedes any disclaimer included in any script, function, one-liner, article, post, etc.

You running this script/function or following the setup example(s) means you will not blame the author(s) if this breaks your stuff. This script/function/setup-example is provided AS IS without warranty of any kind. Author(s) disclaim all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall author(s) be held liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the script or documentation. Neither this script/function/example/documentation, nor any part of it other than those parts that are explicitly copied from others, may be republished without author(s) express written permission. Author(s) retain the right to alter this disclaimer at any time.

It is entirely up to you and/or your business to understand and evaluate the full direct and indirect consequences of using one of these examples or following this documentation.

The latest version of this disclaimer can be found at: https://azuretothemax.net/disclaimer/